Introduction

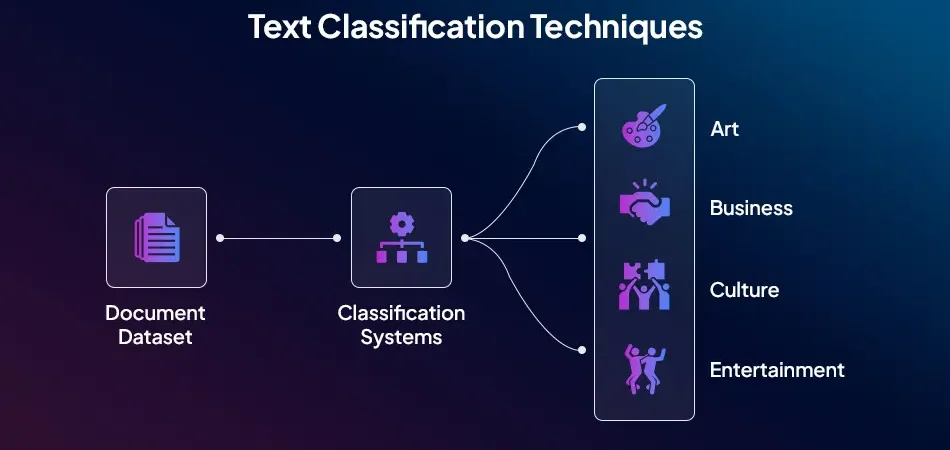

Unstructured text from emails, social media, reviews, and documents keeps growing rapidly, so organizations need fast, automated ways to categorize it. Text classification techniques are the core method for doing so.

Text classification has a broad range of applications, from spam detection and sentiment analysis to topic labeling. This guide explains both classic and contemporary approaches for building advanced text categorization systems.

What is Text Classification?

Text classification is the process of assigning predefined categories or labels to text. An automated classifier that understands text can sort and manage content, enabling tasks such as document tagging, sentiment analysis, and email filtering. Applied well, it improves both user experience and operational efficiency in organizations.

Why Understand Different Techniques?

Different applications call for different text classification techniques. Model complexity should match the project: small datasets are often best served by simple models, while large-scale NLP applications may require deep learning. Understanding the strengths and weaknesses of each technique helps you make decisions that lead to better outcomes.

Traditional Machine Learning Techniques

Traditional machine learning approaches laid the foundation for text categorization. Because they are straightforward to implement and interpret, they work well when data is limited and for fast prototyping. Although deep learning has grown in popularity, traditional methods still deliver strong results in practical applications.

Bag-of-Words (BoW)

- Explanation: The BoW model represents each document as an unordered collection of words and converts it into a vector of word counts.

- Feature Extraction: Text is first split into individual words (tokenized), and a vocabulary of unique words is built. Each document is then transformed into a vector of word occurrence counts.

- Classifiers: Commonly paired with Naive Bayes, Support Vector Machines (SVM), and Logistic Regression, among other classifiers.

- Advantages: Easy to implement, fast to deploy, and produces features that are easy to interpret.

- Disadvantages: Discards grammar, word order, and semantic relationships in the text. Produces highly sparse, high-dimensional feature spaces.
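A minimal sketch of the BoW steps above in plain Python, assuming simple lowercase whitespace tokenization (real pipelines usually also strip punctuation, and scikit-learn's `CountVectorizer` handles this for you). The function names `build_vocabulary` and `bag_of_words` are illustrative, not from any library.

```python
from collections import Counter


def build_vocabulary(docs):
    """Map each unique lowercase token to a column index (sorted for a stable order)."""
    words = sorted({token for doc in docs for token in doc.lower().split()})
    return {word: index for index, word in enumerate(words)}


def bag_of_words(doc, vocab):
    """Return a count vector for `doc`; tokens outside the vocabulary are ignored."""
    counts = Counter(doc.lower().split())
    # Dicts preserve insertion order, so columns follow the vocabulary's sorted order.
    return [counts[word] for word in vocab]


docs = ["the cat sat", "the cat saw the dog"]
vocab = build_vocabulary(docs)
print(list(vocab))                   # ['cat', 'dog', 'sat', 'saw', 'the']
print(bag_of_words(docs[1], vocab))  # [1, 1, 0, 1, 2]

# Word order is lost: both sentences map to the same vector.
order_vocab = build_vocabulary(["dog bites man"])
print(bag_of_words("dog bites man", order_vocab) == bag_of_words("man bites dog", order_vocab))  # True
```

Once the vocabulary grows to thousands of words, most entries in each vector are zero, which is the sparsity problem noted above; production code stores these vectors in sparse matrices.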

N-grams

- Explanation: N-grams extend BoW by capturing contiguous sequences of up to ‘n’ words, which preserves some local context and word order.

- Feature Extraction: Features include sequences of two or three words, called bigrams (2-grams) and trigrams (3-grams).

- Classifiers: SVM, Naive Bayes, and ensemble methods, applied to n-gram features weighted as raw counts (BoW) or TF-IDF.

- Advantages: By capturing word sequences, n-grams add context that helps distinguish phrases such as “not good” and “very good,” which share the word “good” but express opposite sentiment.

- Disadvantages: The number of features grows rapidly, which increases processing time and the risk of overfitting.
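The sketch below shows n-gram feature extraction under the same whitespace-tokenization assumption. The `n_min`/`n_max` parameters loosely mirror the idea behind scikit-learn's `ngram_range` option, but the `word_ngrams` function itself is hypothetical.

```python
def word_ngrams(text, n_min=1, n_max=2):
    """Return all contiguous word n-grams with n_min <= n <= n_max."""
    tokens = text.lower().split()
    grams = []
    for n in range(n_min, n_max + 1):
        # Slide a window of n tokens across the sentence.
        for start in range(len(tokens) - n + 1):
            grams.append(" ".join(tokens[start:start + n]))
    return grams


features = word_ngrams("The movie was not good")
print(features)
# ['the', 'movie', 'was', 'not', 'good', 'the movie', 'movie was', 'was not', 'not good']
```

A sentence of m tokens yields m - n + 1 n-grams for each n, and the number of distinct n-grams across a corpus grows much faster than the word vocabulary, which is the feature explosion described above.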

TF-IDF with Character N-grams

- Explanation: Combining Term Frequency-Inverse Document Frequency (TF-IDF) weighting with character-level n-grams makes it possible to capture morphological differences between words.

- Feature Extraction: Instead of whole words, the method analyzes character sequences (such as the trigrams “ing” and “ion”), which makes it more robust to spelling and typographical errors.

- Classifiers: Commonly paired with Logistic Regression or Random Forest, which tend to generalize well on these features.

- Advantages: Robust to noisy, unstructured text, and captures word structure in languages with complex morphology.

- Disadvantages: Captures little semantic meaning, and careful preprocessing is needed to avoid introducing noise.
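A from-scratch sketch of character-trigram TF-IDF, assuming trigrams over the raw lowercased string and the smoothed formula idf(t) = ln((1 + N) / (1 + df(t))) + 1, which is what scikit-learn's `TfidfVectorizer` uses by default. In practice you would call `TfidfVectorizer(analyzer="char", ngram_range=(3, 3))` rather than these hypothetical helpers.

```python
import math
from collections import Counter


def char_ngrams(text, n=3):
    """Overlapping character n-grams of the lowercased text (spaces included)."""
    text = text.lower()
    return [text[i:i + n] for i in range(len(text) - n + 1)]


def tfidf_vectors(docs, n=3):
    """L2-normalized TF-IDF vectors over character n-grams, stored as sparse dicts."""
    counts = [Counter(char_ngrams(doc, n)) for doc in docs]
    n_docs = len(docs)
    # Document frequency: in how many documents each n-gram appears.
    df = Counter()
    for doc_counts in counts:
        df.update(doc_counts.keys())
    # Smoothed IDF (same formula as scikit-learn's default smooth_idf=True).
    idf = {gram: math.log((1 + n_docs) / (1 + freq)) + 1 for gram, freq in df.items()}
    vectors = []
    for doc_counts in counts:
        weights = {gram: tf * idf[gram] for gram, tf in doc_counts.items()}
        norm = math.sqrt(sum(w * w for w in weights.values()))
        vectors.append({gram: w / norm for gram, w in weights.items()} if norm else weights)
    return vectors


def cosine(u, v):
    """For L2-normalized sparse vectors, the dot product equals cosine similarity."""
    return sum(weight * v.get(gram, 0.0) for gram, weight in u.items())


vecs = tfidf_vectors(["classification", "clasification", "banana"])
print(round(cosine(vecs[0], vecs[1]), 2))  # high: the misspelling shares most trigrams
print(cosine(vecs[0], vecs[2]))            # 0.0: no shared trigrams
```

The misspelled "clasification" still lands close to the correct spelling, which a word-level vocabulary would treat as an entirely unrelated token.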

Start Your Text Classification Project Today

Discover how our experts at Rapidise can help implement the best classification models tailored to your business.

Deep Learning Techniques

Deep learning made natural language processing more human-like by giving machines far richer text interpretation capabilities. With these text classification techniques, models learn hierarchical and contextual patterns directly from data, without manually engineered features.

Word Embeddings

- Explanation: Word embeddings represent words as dense vectors in a lower-dimensional continuous space. Words with similar meanings end up close to one another in that space, so the vectors capture semantic relationships.

- Popular Techniques: Word2Vec, GloVe, and FastText are three popular methods for learning static embeddings from large corpora.

- Key Components: An embedding matrix (learned from scratch or pre-trained), a context window size, and a training objective such as Word2Vec's skip-gram or CBOW (continuous bag-of-words).

- Advantages: Embeddings have far fewer dimensions than sparse BoW vectors while preserving semantic relationships, which usually improves model performance.

- Disadvantages: Static embeddings assign a single vector to each word, so they cannot distinguish the different meanings of polysemous words (for example, “bank” as a riverbank or a financial institution).
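The sketch below uses hypothetical, hand-picked 3-dimensional vectors (real Word2Vec, GloVe, or FastText embeddings typically have 100 to 300 dimensions and are learned from data) to show two ideas: cosine similarity as a measure of semantic closeness, and averaging word vectors into one dense document feature, which is one simple baseline for feeding embeddings to a classifier.

```python
import math

# Hypothetical toy embeddings for illustration only; not trained vectors.
EMBEDDINGS = {
    "good": [0.8, 0.1, 0.3],
    "great": [0.7, 0.2, 0.35],
    "bad": [-0.6, 0.2, 0.1],
    "movie": [0.1, 0.9, 0.0],
}


def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def average_embedding(tokens, embeddings, dim=3):
    """Mean of the vectors of known tokens; a zero vector if none are known."""
    known = [embeddings[t] for t in tokens if t in embeddings]
    if not known:
        return [0.0] * dim
    return [sum(vec[i] for vec in known) / len(known) for i in range(dim)]


good_great = cosine_similarity(EMBEDDINGS["good"], EMBEDDINGS["great"])
good_bad = cosine_similarity(EMBEDDINGS["good"], EMBEDDINGS["bad"])
print(good_great > good_bad)  # True: similar meanings sit closer together
print(average_embedding(["a", "good", "movie"], EMBEDDINGS))  # dense 3-dim document vector
```

Because each word maps to exactly one entry in the lookup table, a word with several senses gets the same vector in every context, which is the static-embedding limitation described above.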

Recurrent Neural Networks (RNNs)

- Explanation: RNNs process sequential data by carrying information from previous inputs forward in a hidden state, which makes them well suited to modeling relationships between words in a sequence.

- Popular Techniques: LSTM and GRU networks are enhanced variants designed to mitigate the vanishing gradient problem.

- Key Components: A hidden state carried between time steps; LSTMs add a memory cell, and both LSTMs and GRUs use gates that control how information propagates through the sequence.

- Advantages: LSTMs perform well on tasks where word order matters, such as sentiment analysis and named entity recognition.

- Disadvantages: Because these networks process tokens one after another, they are hard to parallelize, and computational cost grows with text length.
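A minimal sketch of a vanilla (Elman) RNN forward pass in plain Python, h_t = tanh(W_xh x_t + W_hh h_(t-1) + b_h), using hypothetical hand-picked weights instead of trained ones. It shows why RNNs are order-sensitive and hard to parallelize: each step needs the previous hidden state. LSTM and GRU cells replace the single tanh update with gated updates, which this sketch does not implement.

```python
import math


def rnn_forward(inputs, W_xh, W_hh, b_h):
    """Return the hidden state after each time step of a vanilla RNN."""
    hidden_size = len(b_h)
    h = [0.0] * hidden_size  # initial hidden state h_0
    states = []
    for x in inputs:  # strictly sequential: step t depends on h_(t-1)
        h = [
            math.tanh(
                b_h[i]
                + sum(W_xh[i][j] * x[j] for j in range(len(x)))
                + sum(W_hh[i][k] * h[k] for k in range(hidden_size))
            )
            for i in range(hidden_size)
        ]
        states.append(h)
    return states


# Hypothetical weights and 2-dim word vectors, chosen only for illustration.
W_xh = [[0.5, -0.3], [0.2, 0.8]]
W_hh = [[0.1, 0.4], [-0.6, 0.3]]
b_h = [0.0, 0.0]
word_a, word_b = [1.0, 0.0], [0.0, 1.0]

forward = rnn_forward([word_a, word_b], W_xh, W_hh, b_h)[-1]
backward = rnn_forward([word_b, word_a], W_xh, W_hh, b_h)[-1]
print(forward != backward)  # True: same words, different order, different final state
```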

Convolutional Neural Networks (CNNs)

- Explanation: CNNs were originally designed for image processing, but they also work well on text: convolution filters slide over the word sequence to detect local word groupings such as phrases.

- Popular Techniques: TextCNN is a basic architecture that applies multiple filters of different widths to capture n-gram patterns.

- Key Components: Convolutional layers, activation functions, pooling layers, and fully connected layers.

- Advantages: Fast to train, particularly when inputs are padded or truncated to a fixed length. CNNs perform well on classification tasks such as spam detection and topic identification.

- Disadvantages: CNNs are strong at detecting local features, but they do not model long-range dependencies well without additional architectural changes.
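The core TextCNN operation can be sketched in plain Python: a filter spanning k consecutive word vectors slides along the sentence, a ReLU is applied to each window's score, and max-pooling keeps the strongest response. The word vectors and filter weights below are hypothetical; a real model learns many filters of several widths and feeds the pooled values into a fully connected layer.

```python
def conv1d_relu_maxpool(embeddings, filt, bias=0.0):
    """Apply one width-k filter over a sequence of word vectors, then ReLU and max-pool."""
    k = len(filt)
    if len(embeddings) < k:
        raise ValueError("sequence is shorter than the filter width")
    feature_map = []
    for start in range(len(embeddings) - k + 1):
        # Dot product between the filter and the k word vectors in this window.
        score = bias + sum(
            w * x
            for row, vec in zip(filt, embeddings[start:start + k])
            for w, x in zip(row, vec)
        )
        feature_map.append(max(0.0, score))  # ReLU
    return feature_map, max(feature_map)  # max-pooling over positions


sentence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]  # 4 words, 2-dim vectors
bigram_filter = [[1.0, 0.0], [0.0, 1.0]]  # width 2: responds to a specific word pair
feature_map, pooled = conv1d_relu_maxpool(sentence, bigram_filter)
print(feature_map, pooled)  # [2.0, 1.0, 1.0] 2.0
```

Max-pooling makes the output independent of where in the sentence the matching phrase occurs, but the filter only ever sees k neighboring words, which is the local-feature limitation noted above.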

Transformers

- Explanation: Transformers use self-attention to relate every word to every other word in the sequence, which improves contextual understanding and lets the whole sequence be processed in parallel.

- Popular Techniques: Transformer-based models such as BERT, RoBERTa, GPT, and DistilBERT are popular because they are pre-trained on large text collections.

- Key Components: Multi-head self-attention layers, positional encodings, and encoder and/or decoder stacks depending on the architecture (BERT uses only the encoder stack, GPT only the decoder).

- Advantages: Fine-tuning pre-trained models enables transfer learning and delivers state-of-the-art results on most NLP benchmarks.

- Disadvantages: The models require substantial computational power, and their complexity makes them behave like black boxes.
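Scaled dot-product self-attention computes softmax(Q K^T / sqrt(d)) V. The sketch below is a simplified single head in plain Python that assumes Q = K = V = X, skipping the learned projection matrices, multiple heads, and positional encodings a real Transformer adds. Each output row depends on all input rows but not on any other output row, so all positions can be computed in parallel.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(X):
    """Single-head scaled dot-product self-attention with Q = K = V = X."""
    d = len(X[0])
    outputs, weights = [], []
    for query in X:
        # Similarity of this position to every position in the sequence.
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in X]
        w = softmax(scores)
        weights.append(w)
        # Output is the attention-weighted average of all value vectors.
        outputs.append([sum(w[j] * X[j][i] for j in range(len(X))) for i in range(d)])
    return outputs, weights


X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # hypothetical 3-token sequence
outputs, weights = self_attention(X)
for row in weights:
    print([round(w, 3) for w in row])  # each row of attention weights sums to 1
```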

Choosing the Right Technique

Choosing the right technique from the many available options is essential for building an effective and efficient model. The best choice depends on the type of data, the project requirements, and resource constraints. The key factors and general guidelines below can help with the selection. As NLP models grow more complex, the need for explainable AI (XAI) also increases; XAI covers the methods that make machine learning models understandable to human users.

Factors to Consider

Dataset Size

For small datasets, TF-IDF combined with Naive Bayes or Logistic Regression is a strong choice, because these models need relatively little training data to perform well.
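To illustrate why such models suit small datasets (training is essentially counting), here is a minimal multinomial Naive Bayes classifier with Laplace (add-one) smoothing, written from scratch over whitespace-tokenized word counts. The tiny spam/ham dataset is hypothetical; scikit-learn's `MultinomialNB` would normally do this job.

```python
import math
from collections import Counter, defaultdict


def train_naive_bayes(docs, labels, alpha=1.0):
    """Estimate log priors and Laplace-smoothed log likelihoods from word counts."""
    vocab = {token for doc in docs for token in doc.lower().split()}
    word_counts = defaultdict(Counter)
    for doc, label in zip(docs, labels):
        word_counts[label].update(doc.lower().split())
    log_priors, log_likelihoods = {}, {}
    for label, count in Counter(labels).items():
        log_priors[label] = math.log(count / len(docs))
        total = sum(word_counts[label].values()) + alpha * len(vocab)
        log_likelihoods[label] = {
            word: math.log((word_counts[label][word] + alpha) / total) for word in vocab
        }
    return log_priors, log_likelihoods, vocab


def predict(model, doc):
    log_priors, log_likelihoods, vocab = model
    tokens = [t for t in doc.lower().split() if t in vocab]  # ignore unseen words
    scores = {
        label: prior + sum(log_likelihoods[label][t] for t in tokens)
        for label, prior in log_priors.items()
    }
    return max(scores, key=scores.get)


docs = ["win money now", "free prize win", "meeting at noon", "project meeting notes"]
labels = ["spam", "spam", "ham", "ham"]
model = train_naive_bayes(docs, labels)
print(predict(model, "win a free prize"))  # spam
print(predict(model, "meeting notes"))     # ham
```

Summing log probabilities instead of multiplying raw probabilities avoids numerical underflow on long documents.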

As datasets grow, deep learning models, particularly CNNs and Transformers, can learn more sophisticated patterns from the data.

Complexity of the Task

Simple tasks such as spam identification and language detection often work well with traditional methods.

More nuanced tasks such as emotion detection and multi-label classification often benefit from deep learning models, such as Transformers and RNNs, that capture diverse contexts.

Available Computational Resources

Businesses with limited infrastructure generally rely on simpler approaches such as BoW or TF-IDF.

Organizations with powerful hardware can consider fine-tuning models such as BERT to reach state-of-the-art performance.

Interpretability Requirements

When transparency is required (for example, in healthcare and legal domains), Logistic Regression with BoW or TF-IDF features is often the better choice.
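Logistic Regression is interpretable because each BoW or TF-IDF feature gets a single weight whose sign and size show how that word pushes the prediction. The sketch below trains it with plain batch gradient descent on a hypothetical four-document sentiment dataset (vocabulary and data are invented for illustration; scikit-learn's `LogisticRegression` would normally be used and adds regularization) and then lists the learned word weights.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic_regression(X, y, lr=0.5, epochs=500):
    """Unregularized logistic regression fitted by batch gradient descent on log loss."""
    n_samples, n_features = len(X), len(X[0])
    w, b = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for xi, yi in zip(X, y):
            error = sigmoid(b + sum(wj * xj for wj, xj in zip(w, xi))) - yi
            for j in range(n_features):
                grad_w[j] += error * xi[j]
            grad_b += error
        w = [wj - lr * g / n_samples for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / n_samples
    return w, b


vocab = ["great", "love", "broken", "refund"]
X = [[1, 1, 0, 0], [0, 1, 0, 0], [0, 0, 1, 1], [0, 0, 1, 0]]  # BoW counts per review
y = [1, 1, 0, 0]  # 1 = positive review, 0 = negative review
w, b = train_logistic_regression(X, y)
for word, weight in sorted(zip(vocab, w), key=lambda pair: pair[1], reverse=True):
    print(f"{word:>7}: {weight:+.2f}")  # positive weight pushes toward "positive"
```

Reading the sorted weights directly answers "which words drove this decision?", which is much harder to do for a deep model.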

Deep models may achieve higher accuracy, but their decisions are hard to interpret because they behave like black boxes.

Time Constraints

Need a quick deployment? Traditional methods are faster to implement and require far less complex training.

Have a longer timeline and need the best possible performance? Deep learning models can deliver superior results, although setup, training, and fine-tuning take longer.

General Guidelines

For rapid prototyping and baselines:

Begin with Bag-of-Words or TF-IDF models. They are simple to implement and often deliver surprisingly good results on specific tasks.

For intermediate-scale applications:

Simple neural networks, such as LSTMs or dense networks built on word embeddings, capture semantic meaning well while remaining resource-friendly.

For cutting-edge applications or when accuracy is critical:

For state-of-the-art applications where accuracy is critical, use pre-trained Transformer models such as BERT or RoBERTa. These models perform exceptionally well, particularly after fine-tuning on domain-specific data.

Explore Our AI & NLP Services

See how we deliver end-to-end NLP solutions using cutting-edge text classification techniques.

Evaluation Metrics

A thorough evaluation shows how usable a text classifier actually is. Appropriate metrics verify not only that the model is accurate but also that it performs reliably and in a balanced way across every category.

Accuracy

Accuracy is the simplest metric and one of the most commonly used. It measures the proportion of correct predictions among all predictions. However, it can be misleading on imbalanced datasets where one class greatly outnumbers the others.
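A quick illustration of how accuracy misleads on imbalanced data, using hypothetical labels: a "classifier" that never predicts spam still scores 95% accuracy when only 5 of 100 emails are spam.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


y_true = [0] * 95 + [1] * 5  # 95 legitimate emails (0), 5 spam emails (1)
always_legit = [0] * 100     # never flags anything as spam
print(accuracy(y_true, always_legit))  # 0.95, yet it catches no spam at all
```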

Precision

Precision measures how many of the model's positive predictions are actually positive: TP / (TP + FP). It matters most when false positives are costly, as in spam detection, where a legitimate email wrongly flagged as spam may never be read.

Recall

Recall, also called sensitivity or the true positive rate, measures the fraction of actual positive instances that the model correctly identifies: TP / (TP + FN). In other words, what proportion of the real positives did we detect? Recall is essential when missing a positive case has severe consequences, such as failing to detect fraudulent activity.

F1-Score

The F1-score is the harmonic mean of precision and recall: F1 = 2 × (precision × recall) / (precision + recall). It is useful when you want a single metric that balances false positives and false negatives, particularly on imbalanced datasets.
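The precision, recall, and F1 formulas can be checked with a short from-scratch function. The labels below are hypothetical; scikit-learn's `precision_recall_fscore_support` computes the same quantities.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Return (precision, recall, f1) for the given positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 1, 0, 0, 0, 0]  # TP = 3, FN = 1, FP = 2
p, r, f = binary_metrics(y_true, y_pred)
print(round(p, 3), round(r, 3), round(f, 3))  # 0.6 0.75 0.667
```

The zero-division guards return 0.0 when a metric is undefined (for example, a model that never predicts the positive class), which is a common but not universal convention.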

Confusion Matrix

The confusion matrix gives a complete view of model performance by tabulating true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN). This powerful visual tool shows at a glance which classes the model confuses with one another.
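For more than two classes, a confusion matrix is a table of counts with one row per true label and one column per predicted label. A minimal sketch with hypothetical topic labels:

```python
def confusion_matrix(y_true, y_pred, labels):
    """Rows are true classes, columns are predicted classes, cells are counts."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for t, p in zip(y_true, y_pred):
        matrix[index[t]][index[p]] += 1
    return matrix


labels = ["sports", "politics", "tech"]
y_true = ["sports", "sports", "politics", "tech", "tech", "politics"]
y_pred = ["sports", "tech", "politics", "tech", "politics", "politics"]
for label, row in zip(labels, confusion_matrix(y_true, y_pred, labels)):
    print(f"{label:>8}: {row}")
# Diagonal cells are correct predictions; off-diagonal cells show which classes get confused.
```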

Area Under the ROC Curve (AUC)

AUC-ROC measures how well a model separates classes across all classification thresholds. A higher AUC indicates better performance: 1.0 means perfect separation, while 0.5 is no better than random guessing. It is especially useful for binary classification problems and for comparing different classifiers.
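AUC has an equivalent probabilistic reading: the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one, with ties counted as half. The sketch below computes it that way by comparing every positive-negative pair, which is O(P × N) and fine for illustration; library implementations such as scikit-learn's `roc_auc_score` derive it from the ROC curve instead. The scores are hypothetical.

```python
def roc_auc(y_true, scores):
    """AUC as the probability that a random positive outranks a random negative."""
    positives = [s for y, s in zip(y_true, scores) if y == 1]
    negatives = [s for y, s in zip(y_true, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(positives) * len(negatives))


y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]  # hypothetical predicted spam probabilities
print(roc_auc(y_true, scores))  # 0.75
```

Because only the ranking of scores matters, AUC does not depend on any single decision threshold.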

Conclusion

Text classification continues to advance alongside progress in machine learning and deep learning. By understanding the full range of techniques, from BoW to Transformers, organizations can select the text classification solution that best fits their needs. As datasets grow and models improve, the potential of text classification keeps expanding.