Introduction

Natural Language Processing (NLP), Machine Learning (ML), and Deep Learning (DL) are three central concepts in artificial intelligence. Each has its own purpose and methods, yet the three overlap in practice. Understanding where they differ and where they complement each other matters for anyone working with AI technology.

This blog examines the three concepts in depth: their applications, the differences between them, and where they are likely to head next.

Machine Learning (ML) Fundamentals

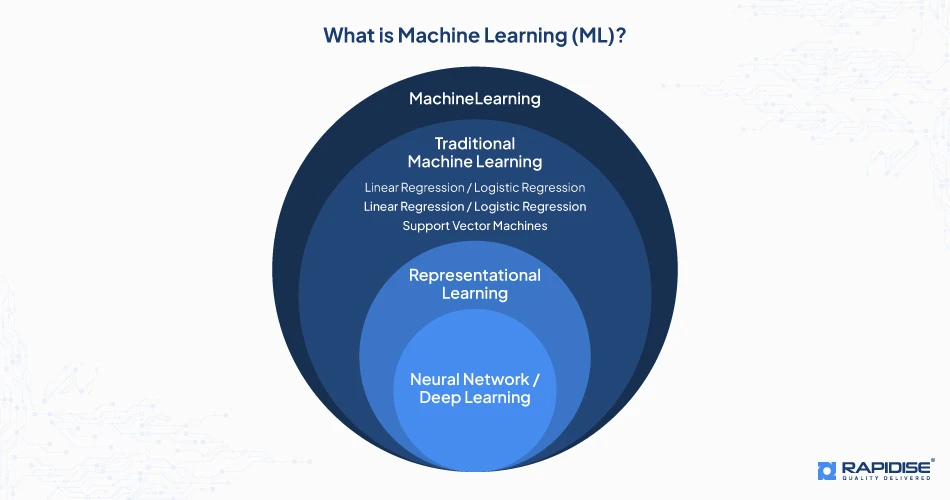

What is Machine Learning (ML)?

Machine Learning is a subset of Artificial Intelligence (AI) in which computers learn patterns from data, without being explicitly programmed, in order to make decisions or predictions. Unlike rule-based programs, an ML system improves its outputs as it evaluates more data.

As these systems see more data, their accuracy improves, which makes them valuable for tasks such as image recognition, fraud detection, and recommendation systems.

Core Concepts of ML

- Supervised Learning: Models are trained on labeled data, where each entry pairs an input with its correct output. Examples include email spam detection and handwriting recognition. The trained model learns a mapping from inputs to labels and uses it to predict labels for new data.

- Unsupervised Learning: The model receives unlabeled data and must discover structure in it on its own. Applications include customer market segmentation and anomaly detection in network security.

- Reinforcement Learning: An agent learns to make good decisions by receiving rewards for appropriate actions and penalties for inappropriate ones. It is used in game-playing AI, robotics, and autonomous systems because the agent learns continuously through interaction with its environment.
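
To make the unsupervised case concrete, here is a minimal sketch of k-means clustering on one-dimensional data, written with the Python standard library only. The spending figures, the starting centroids, and the function name `kmeans_1d` are assumptions made up for illustration; a real project would more likely use an established library.

```python
def kmeans_1d(values, centroids, iterations=10):
    """Cluster 1-D values around the given starting centroids."""
    centroids = list(centroids)
    clusters = [[] for _ in centroids]
    for _ in range(iterations):
        # Assignment step: attach each value to its nearest centroid.
        clusters = [[] for _ in centroids]
        for v in values:
            nearest = min(range(len(centroids)), key=lambda i: abs(v - centroids[i]))
            clusters[nearest].append(v)
        # Update step: move each centroid to the mean of its cluster.
        centroids = [
            sum(c) / len(c) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
    return centroids, clusters


# Hypothetical monthly spend per customer; note that no labels are given.
monthly_spend = [12, 15, 14, 90, 95, 88, 13, 92]
centroids, clusters = kmeans_1d(monthly_spend, centroids=[0, 100])
print(centroids)  # [13.5, 91.25]
print(clusters)   # [[12, 15, 14, 13], [90, 95, 88, 92]]
```

The algorithm is never told which customers are low or high spenders; the two groups emerge from the data alone, which is the defining trait of unsupervised learning.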

Basic Algorithms of ML

- Decision Trees split data on specific conditions in a tree structure and are used for both classification and regression.

- Support Vector Machines (SVMs) are versatile supervised learning models that find the boundary that best separates the classes in a dataset.

- Random Forest is an ensemble learning technique that builds many decision trees and combines their predictions for better accuracy and more robust results.

- K-Nearest Neighbors (KNN) is a simple algorithm that classifies a new data point according to the known data points closest to it.

- Linear Regression predicts continuous values, while Logistic Regression is used for binary classification tasks such as spam detection and disease prediction.
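
Of the algorithms above, K-Nearest Neighbors is the easiest to write out in full. The sketch below is a minimal standard-library version; the training points, the labels "A" and "B", and the function name `knn_predict` are illustrative assumptions.

```python
import math
from collections import Counter


def knn_predict(train, query, k=3):
    """Return the majority label among the k training points nearest to query.

    train is a list of ((x, y), label) pairs.
    """
    by_distance = sorted(train, key=lambda item: math.dist(item[0], query))
    top_labels = [label for _, label in by_distance[:k]]
    return Counter(top_labels).most_common(1)[0][0]


# Hypothetical labeled points forming two well-separated groups.
train = [
    ((1.0, 1.0), "A"), ((1.5, 2.0), "A"), ((2.0, 1.0), "A"),
    ((6.0, 6.0), "B"), ((7.0, 6.5), "B"), ((6.5, 7.0), "B"),
]

print(knn_predict(train, (1.2, 1.5)))  # A
print(knn_predict(train, (6.4, 6.2)))  # B
```

There is no training phase here: the "model" is simply the stored data, and all the work happens at prediction time.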

Applications of Machine Learning

- Fraud Detection: Financial institutions use ML algorithms to identify fraudulent transactions by spotting unusual activity in transaction patterns.

- Recommendation Systems: Netflix and Amazon use Machine Learning to offer personalized recommendations of shows, products, and songs that users are likely to enjoy.

- Predictive Maintenance: Manufacturers and other industrial companies use ML to forecast equipment failures from past operational records, which reduces downtime and maintenance costs.

- Customer Segmentation: Companies use ML to group customers by behavior, personal attributes, and purchase history in order to target marketing programs.
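
As a rough illustration of "spotting unusual activity" in the fraud detection case, the sketch below flags transactions whose amount lies far from the average, measured in standard deviations (a z-score). This is a simple statistical baseline rather than a trained ML model, and the amounts, the threshold of 2.0, and the function name `flag_unusual` are assumptions for illustration.

```python
from statistics import mean, stdev


def flag_unusual(amounts, threshold=2.0):
    """Return the amounts whose z-score exceeds the threshold."""
    mu = mean(amounts)
    sigma = stdev(amounts)
    if sigma == 0:
        return []  # all amounts identical, nothing stands out
    return [a for a in amounts if abs(a - mu) / sigma > threshold]


# Hypothetical card transactions; one is far larger than the rest.
amounts = [25.0, 30.5, 27.0, 22.0, 31.0, 28.5, 26.0, 950.0, 29.0, 24.5]
print(flag_unusual(amounts))  # [950.0]
```

Production systems typically combine many more signals (location, merchant, timing) and learn the decision boundary from labeled fraud cases, but the underlying idea of measuring deviation from normal behavior is the same.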

Deep Learning (DL) Dive

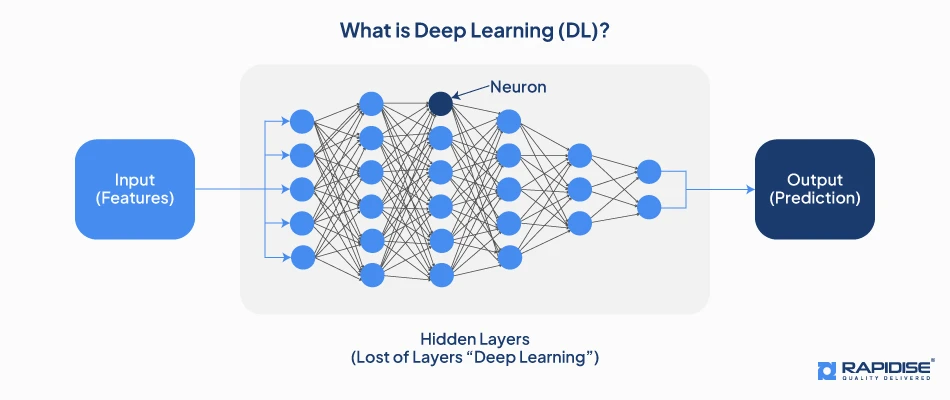

What is Deep Learning (DL)?

Deep Learning (DL) is an advanced ML method built on artificial neural networks (ANNs), which are loosely inspired by the human brain. These networks stack multiple layers to extract complex patterns from large volumes of data.

Deep Learning models learn feature representations automatically: they turn raw input into meaningful features without manual design, which makes them especially effective in image recognition and similar tasks.

Core Concepts of DL

- Neural Networks: At the core of Deep Learning are artificial neural networks made up of an input layer, one or more hidden layers, and an output layer. Data flows from neuron to neuron across the layers, with each connection carrying a weight.

- Backpropagation: Backpropagation computes how much each weight contributed to the prediction error, and gradient descent then adjusts the weights accordingly. Over many training iterations, the model reduces the difference between its predictions and the actual values.

- Activation Functions: These functions introduce non-linearity into the network, which lets it detect complex patterns. Three common activation functions are ReLU (Rectified Linear Unit), Sigmoid, and Tanh, each of which controls neuron activation in its own way.

- Convolutional Neural Networks (CNNs): CNNs are designed for image processing. They apply filters that detect features such as edges, textures, and objects. Facial recognition and medical image analysis depend on CNNs.

- Recurrent Neural Networks (RNNs): RNNs process sequential data, particularly for time series forecasting and natural language processing. Variants such as LSTM and GRU networks help preserve long-range dependencies within sequences.
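
The weight-update loop described under Backpropagation can be sketched with a single neuron, which is the smallest possible "network". The example below trains one sigmoid neuron with gradient descent to reproduce the logical OR function. The learning rate, epoch count, and all names are illustrative assumptions, and a real deep network repeats the same forward and backward steps across many layers.

```python
import math


def sigmoid(z):
    """Sigmoid activation: squashes any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def train_neuron(samples, lr=0.5, epochs=5000):
    """Train one sigmoid neuron with batch gradient descent."""
    w1, w2, b = 0.0, 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        g1 = g2 = gb = 0.0
        for (x1, x2), target in samples:
            # Forward pass: weighted sum followed by the activation.
            pred = sigmoid(w1 * x1 + w2 * x2 + b)
            # Backward pass: with a sigmoid output and cross-entropy loss,
            # the gradient with respect to the weighted sum is pred - target.
            delta = pred - target
            g1 += delta * x1
            g2 += delta * x2
            gb += delta
        # Gradient descent step: move each weight against its average gradient.
        w1 -= lr * g1 / n
        w2 -= lr * g2 / n
        b -= lr * gb / n
    return w1, w2, b


def predict(params, x1, x2):
    w1, w2, b = params
    return sigmoid(w1 * x1 + w2 * x2 + b)


# Truth table for logical OR, used as a tiny labeled dataset.
OR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
params = train_neuron(OR_DATA)
for (x1, x2), target in OR_DATA:
    print((x1, x2), target, round(predict(params, x1, x2), 3))
```

After training, the prediction for (0, 0) falls below 0.5 and the other three rise above it, so thresholding at 0.5 recovers the OR labels.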

Key Features of DL

- Ability to Handle Large-Scale Data: Deep Learning thrives on large datasets, which enable strong performance across many application areas.

- High Computational Requirements: Training deep networks calls for significant computational power, typically supplied by GPUs or TPUs, whereas traditional ML usually does not need such hardware.

- Works Best with Unstructured Data: DL models excel at processing unstructured data such as images, audio, and text, whereas traditional ML models mainly handle structured data.

- Feature Learning Automation: DL can learn important features directly from raw data, which reduces the need for manual feature engineering.

Applications of Deep Learning

- Computer Vision: DL powers image classification, object detection, facial recognition, and medical imaging, which support security systems, healthcare, and autonomous vehicles.

- Autonomous Vehicles: Self-driving cars depend on DL algorithms to process sensor data, identify road signs, detect obstacles, and make driving decisions in real time.

- Speech Recognition: Virtual assistants such as Siri, Google Assistant, and Alexa use DL-powered models to convert spoken language into text before responding.

- Medical Diagnostics: DL helps healthcare professionals detect diseases earlier, assess medical images more accurately, and suggest tailored treatments that improve patient outcomes.

Natural Language Processing (NLP) Explained

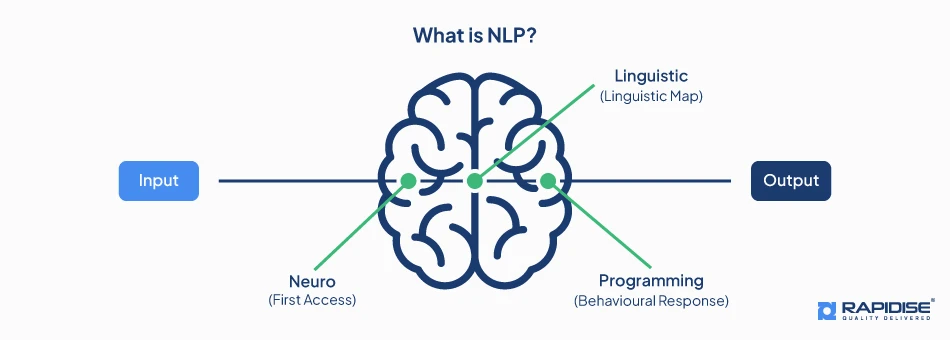

What is NLP?

Natural Language Processing (NLP) is a field of artificial intelligence (AI) that enables machines to understand, interpret, and generate human language in a meaningful way. It lets people supply speech and text to machines for analysis.

NLP is a key component of modern technology because it powers applications such as chatbots, speech recognition, sentiment analysis, and machine translation.

Core Concepts of NLP

- Syntax and Semantics: Syntax is the grammatical structure of sentences, while semantics concerns the meaning of words and phrases. NLP models draw on both to understand and generate text.

- Tokenization and Stemming: Tokenization breaks text into small units (tokens), and stemming reduces words to their base forms, such as “running” to “run”. These steps make large text collections easier to process.

- Named Entity Recognition (NER): NER detects specific items in text, such as names, locations, dates, and organizations. It is widely used in search engines, automated document analysis, and chatbots.

- Part-of-Speech (POS) Tagging: The system labels each word in a sentence as a noun, verb, adjective, and so on, which improves its understanding of sentence context.
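
Tokenization and stemming are simple enough to sketch in a few lines. The version below uses only the standard library; the suffix rules in `naive_stem` are a deliberately crude assumption for illustration and not an established algorithm such as the Porter stemmer, so they will mis-stem many real words.

```python
import re


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def naive_stem(word):
    """Strip a few common English suffixes (illustrative rules only)."""
    for suffix in ("ing", "ed", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            stem = word[: -len(suffix)]
            # Undo consonant doubling, e.g. "runn" -> "run".
            if stem[-1] == stem[-2] and stem[-1] not in "aeioulsz":
                stem = stem[:-1]
            return stem
    return word


tokens = tokenize("Running, jumped; cats!")
print(tokens)                           # ['running', 'jumped', 'cats']
print([naive_stem(t) for t in tokens])  # ['run', 'jump', 'cat']
```

Mapping several surface forms onto one stem shrinks the vocabulary a downstream model has to handle, which is where the efficiency gain comes from.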

Techniques and Algorithms of NLP

- Rule-Based NLP: This approach relies on hand-written linguistic rules and dictionaries. It works well for simple tasks but struggles with ambiguous or complex text.

- Statistical NLP: This approach uses machine learning with probabilistic models to analyze language patterns. It improves as it processes more data, which makes it valuable for text classification and translation.

- Neural NLP: Transformer models such as BERT and GPT-3 take deep learning-based NLP furthest, capturing context, generating natural-sounding text, and powering conversational AI.
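
The strengths and limits of the rule-based approach show up clearly in a small example. The sketch below scores sentiment by counting words from two hand-written dictionaries; the word lists and the function name `rule_based_sentiment` are assumptions invented for illustration.

```python
# Hypothetical hand-written dictionaries (the "rules" of this system).
POSITIVE = {"good", "great", "excellent", "love"}
NEGATIVE = {"bad", "poor", "terrible", "hate"}


def rule_based_sentiment(text):
    """Classify text by counting dictionary hits, ignoring all context."""
    words = [w.strip(".,!?") for w in text.lower().split()]
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(rule_based_sentiment("The battery life is great!"))  # positive
print(rule_based_sentiment("The screen is not good."))     # positive (wrong)
```

The second sentence is misclassified because the rules see the word "good" and have no notion of negation. Handling such cases with more rules quickly becomes unmanageable, which is the gap that statistical and neural methods aim to close.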

Applications of NLP

- Chatbots and Virtual Assistants: NLP allows chatbots and virtual assistants such as Siri, Alexa, and Google Assistant to hold natural-sounding conversations with people.

- Sentiment Analysis: Businesses use NLP to analyze customer reviews, social media content, and product feedback to gauge public reception of their offerings.

- Machine Translation: NLP allows tools such as Google Translate to translate text between languages while preserving its original context.

- Text Summarization: NLP makes information more accessible by generating summaries of long texts such as documents and reports.

The Interplay: ML, DL, and NLP - Where They Overlap

ML as the Foundation

Machine Learning underpins both Deep Learning and Natural Language Processing. NLP relies on core learning techniques such as classification, clustering, and regression, all of which are in common use in NLP applications.

Traditional NLP models are built on ML techniques such as decision trees, Naïve Bayes classifiers, and support vector machines (SVMs) to process text data.

These models handle tasks such as spam detection, document classification, and sentiment analysis. Statistical and probabilistic methods remain essential to NLP, although on their own they are limited in how well they capture human language.
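
A Naïve Bayes spam filter is a classic case of a traditional ML technique applied to text. The sketch below is a minimal standard-library version with add-one (Laplace) smoothing; the six training messages and the function names are assumptions for illustration, and a real filter would need far more data.

```python
import math
from collections import Counter


def train_naive_bayes(docs):
    """Count words per label. docs is a list of (text, label) pairs."""
    word_counts = {}
    label_counts = Counter()
    vocab = set()
    for text, label in docs:
        label_counts[label] += 1
        counts = word_counts.setdefault(label, Counter())
        for word in text.lower().split():
            counts[word] += 1
            vocab.add(word)
    return word_counts, label_counts, vocab


def classify(text, model):
    """Return the label with the highest log-probability for the text."""
    word_counts, label_counts, vocab = model
    total_docs = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label in label_counts:
        # Prior: how common this label is overall.
        score = math.log(label_counts[label] / total_docs)
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            # Likelihood with add-one smoothing so unseen words do not zero it out.
            count = word_counts[label][word]
            score += math.log((count + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Hypothetical training messages.
docs = [
    ("win a free prize now", "spam"),
    ("free money offer", "spam"),
    ("claim your free prize", "spam"),
    ("meeting agenda for monday", "ham"),
    ("lunch with the team", "ham"),
    ("project report attached", "ham"),
]
model = train_naive_bayes(docs)
print(classify("free prize offer", model))     # spam
print(classify("team meeting monday", model))  # ham
```

The classifier treats each message as an unordered bag of words, which is exactly the kind of limitation in capturing language that later deep learning models address.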

DL's Role in NLP

Deep Learning brought a fundamental shift to NLP by improving the ability of machines to understand and produce natural, human-like text. Traditional ML models demand extensive feature engineering; DL models do not, because they learn relevant textual features automatically from large datasets.

RNNs and their LSTM variants process sequences step by step, which makes them suitable for tasks such as speech recognition and text generation. Transformer-based models such as BERT (Bidirectional Encoder Representations from Transformers) and GPT (Generative Pre-trained Transformer) have changed NLP through their strong grasp of context.

These developments led to better chatbots, automatic content generation tools, and machine translation systems.
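
To show what processing a sequence step by step means for an RNN, the sketch below runs a single recurrent cell with one scalar hidden state. The update rule is the standard simple-RNN form, but the weights `w_x` and `w_h` are fixed, hypothetical values rather than learned ones, and real networks use vectors and matrices instead of single numbers.

```python
import math


def run_recurrent_cell(inputs, w_x=1.0, w_h=0.5):
    """Feed a sequence through h_t = tanh(w_x * x_t + w_h * h_prev)."""
    h = 0.0  # the hidden state starts empty
    for x in inputs:
        # Each step mixes the new input with a memory of earlier steps.
        h = math.tanh(w_x * x + w_h * h)
    return h


# The same three values in a different order.
early = run_recurrent_cell([1.0, 0.0, 0.0])
late = run_recurrent_cell([0.0, 0.0, 1.0])
print(round(early, 3), round(late, 3))
```

The two sequences contain the same values, yet the final states differ because the signal that arrived early has faded by the last step. That fading is the long-range dependency problem that LSTM networks were designed to ease.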

Specific Examples of Overlap

ML, DL, and NLP come together in many practical, real-world applications.

- Speech Recognition: Siri and Alexa convert voice input to text with DL-based NLP models, then interpret the user request and generate a response.

- Chatbots and Conversational AI: Customer support chatbots analyze queries with ML-based classification, and DL-based natural language understanding (NLU) improves their accuracy.

- Sentiment Analysis: Businesses use ML and DL models to understand customer opinions by scanning social media content and feedback records.

- Machine Translation: Translation services use DL-based NLP models, which achieve better accuracy than earlier statistical methods.

Visual Representation

A diagram of the relationship would show ML as the foundation supporting both DL and NLP, with DL acting as an enhancer of NLP. ML supplies the fundamental learning approaches, DL deepens language understanding, and NLP serves as the interface that lets humans communicate with computers.

Key Differences: NLP vs. Machine Learning vs. Deep Learning

Data Requirements

A main distinction between NLP, Machine Learning, and Deep Learning lies in the data each needs to work well. ML models typically use structured data, with defined features and labels, or semi-structured data. DL needs massive amounts of data, often unstructured, such as audio, images, and long texts, to train its deep neural networks effectively.

NLP works with language datasets made up of large volumes of text, such as books, articles, speech transcripts, and social media posts. With feature engineering, ML can work with limited datasets, but DL-based NLP models need large data collections and intensive computing resources during training.

Complexity and Computation

The three fields also differ considerably in computational complexity. Standard CPUs are enough to run traditional ML algorithms such as decision trees and logistic regression, because these algorithms have light computational needs. Deep neural networks are far more demanding, so they typically need GPUs or TPUs to run large-scale calculations efficiently.

The computational requirements of NLP sit between those of traditional ML and DL: basic NLP tasks can use standard ML algorithms, but complex NLP tasks rely on deep learning architectures, which process text at a high computational cost.

Problem Types

Each field suits different types of problems. ML is mainly applied to predictive modeling, fraud detection, and recommendation systems, where structured data is central. DL’s main strength is complex pattern recognition, such as image recognition, speech processing, and self-driving car navigation.

NLP targets language-based challenges such as text summarization, sentiment analysis, chatbot development, and automatic translation. The nature of the problem and the type of data available determine which of ML, DL, and NLP is the best fit.

Practical Considerations

When building a business solution, the choice among ML, DL, and NLP depends on three practical factors: available processing power, accessible data, and the difficulty of the analytical problem. ML models remain the first choice for fast insights from structured data, for example in financial institutions and healthcare facilities.

DL is powerful but requires major computational investment. It works best for businesses that process massive amounts of unstructured data and need deep analysis, as in speech recognition and computer vision.

NLP is the best fit where human-like interpretation of text is essential, in text-oriented applications such as customer service automation and search engines. Organizations should start by evaluating their needs in order to pick the right AI strategy.

Future Trends and Impact: NLP vs. Machine Learning vs. Deep Learning

Emerging Trends

Continuous advances in NLP, Machine Learning, and Deep Learning keep expanding what automated intelligent systems can do. Transformer-based models such as GPT and BERT are gaining zero-shot and few-shot capabilities, performing tasks with little or no task-specific training data.

Deep Learning research is focusing on self-supervised learning, which reduces dependence on large labeled datasets and leads to more affordable and efficient AI systems. Machine Learning is being strengthened by federated learning, which trains models across multiple devices without transferring their raw data. These trends suggest that future AI models will be more adaptable while remaining effective in practical use.
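
The core idea of federated learning, sharing model updates instead of raw data, can be sketched as a weighted average. In the example below, each device is assumed to have already trained locally and reports only its weights and its sample count; the numbers and the function name `federated_average` are hypothetical.

```python
def federated_average(client_updates):
    """Combine locally trained weights, weighting each client by its data size.

    client_updates is a list of (weights, num_samples) pairs.
    """
    total = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    return [
        sum(weights[i] * n for weights, n in client_updates) / total
        for i in range(dim)
    ]


# Two hypothetical devices report weights and sample counts, never raw data.
updates = [
    ([0.2, 1.0], 100),
    ([0.4, 2.0], 300),
]
global_weights = federated_average(updates)
print(global_weights)
```

The second device contributes three times as much data, so the combined weights land closer to its values (about 0.35 and 1.75). The server that runs this step sees only numbers describing the models, not the records they were trained on.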

Potential Impact

The combination of NLP, Machine Learning, and Deep Learning positions industries to generate automated insights and AI-driven solutions at massive scale. In healthcare, AI can improve medical diagnostics by extracting essential patient information from clinical records and medical histories.

Intelligent chatbots and conversational AI let customer service products offer more personalized interactions. Financial institutions benefit from AI-based fraud defense systems that continuously learn to recognize new security risks. As the technology advances, businesses across sectors can streamline their processes and adopt automated decision systems to drive data-based innovation.

Ethical Considerations

With this growing power comes a responsibility for companies to address the ethical problems that AI innovation raises. A primary concern is that AI models trained on biased data can worsen societal discrimination.

Research teams and organizations face the key task of keeping AI decision systems transparent and fair. The massive quantity of data needed to improve AI models also raises major privacy and data protection concerns.

Regulations such as GDPR push AI solution providers to meet standards for responsible data handling and user consent. Responsible AI practice means building in security, explainability, and accountability so that systems operate ethically.

Conclusion

NLP, Machine Learning, and Deep Learning are three essential components of AI, each with its own role. ML provides the foundation, DL builds on it to power more complex AI applications, and NLP enables AI systems to understand human language. Organizations that adopt these technologies are better positioned to lead in automation, analytics, and AI-driven solutions.