Introduction

This blog surveys the essential Edge AI tools: model optimization frameworks, deployment and management solutions, hardware acceleration libraries, and development platforms. It also covers security considerations and best practices, and shows how Rapidise helps businesses build Edge AI solutions.

Model Optimization Frameworks

Model optimization frameworks help developers shorten inference time and reduce memory requirements, which improves overall efficiency. With these optimizations, AI workloads can run well on embedded systems, mobile devices, and IoT applications with minimal impact on model performance.
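One common way to reduce memory requirements is post-training quantization. The sketch below is illustrative only: it uses plain Python rather than any framework's API, and `quantize_int8` and `dequantize` are hypothetical helper names. It assumes a symmetric, per-tensor scheme in which each weight is stored as an 8-bit integer plus one shared scale factor.

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integer representation."""
    return [v * scale for v in quantized]


weights = [0.52, -1.27, 0.0, 0.31, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# The rounding error per weight is bounded by half of one quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

Storing one byte per weight instead of a four-byte float cuts weight storage by roughly 4x, at the cost of the small rounding error printed above.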

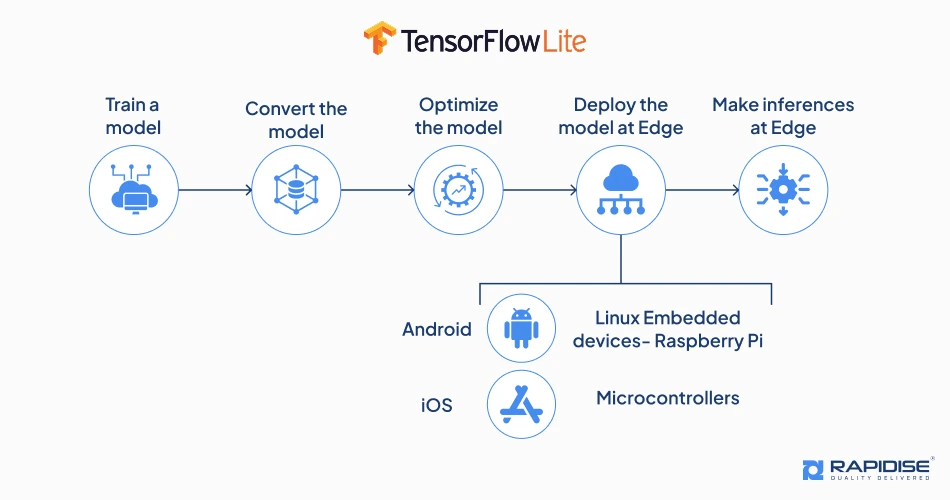

TensorFlow Lite

TensorFlow Lite has built-in hardware acceleration support, so it can use GPUs and NPUs when they are available on a device. It remains popular for mobile and embedded AI applications thanks to broad community backing and support for many platforms.

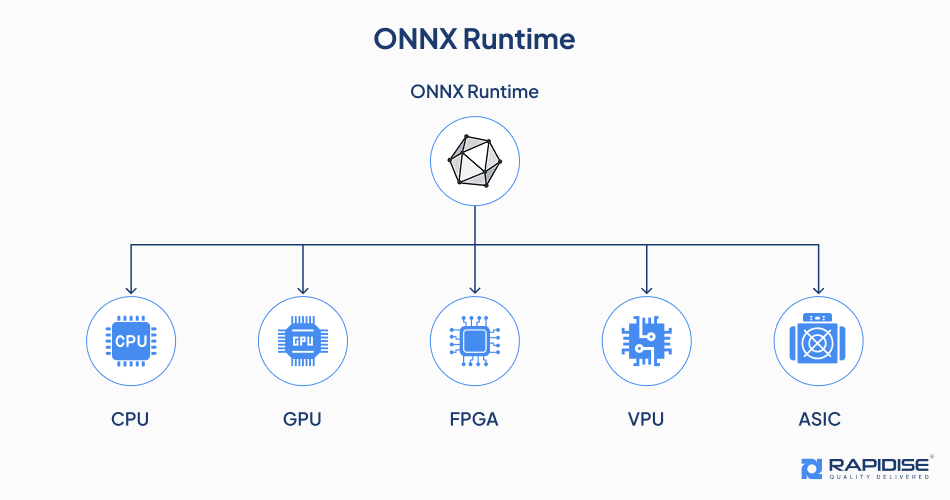

ONNX Runtime

ONNX Runtime applies optimizations that improve inference performance, so advanced models can run well at the edge. It also integrates with NVIDIA TensorRT and Intel OpenVINO, which improves performance across a range of edge devices.

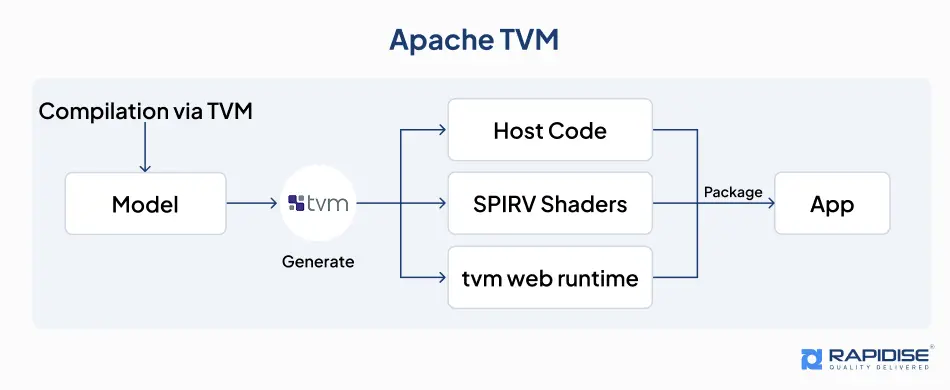

Apache TVM

TVM's code generation lets developers deploy AI models efficiently to many kinds of edge devices. Its combination of performance and flexibility makes it a strong choice for optimizing deep learning models for edge computing.

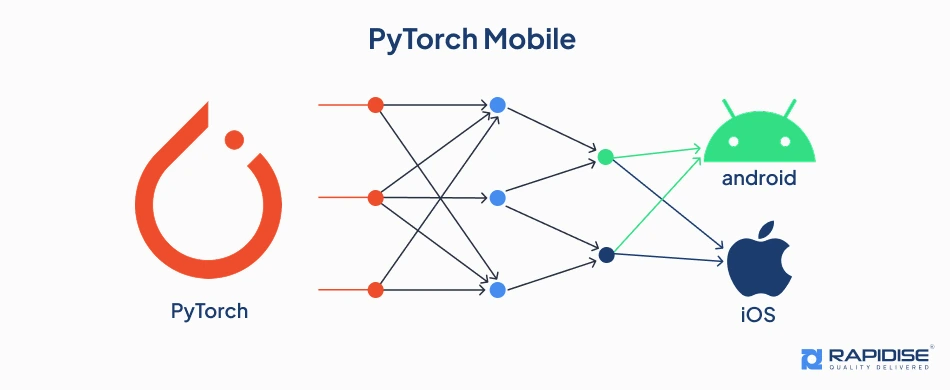

PyTorch Mobile

PyTorch Mobile gives developers access to hardware acceleration through Metal and Core ML on Apple devices, which improves model efficiency. Its approachable interface and dynamic computation make it easier to take mobile AI applications from research to production.

Paddle Lite

Paddle Lite enables real-time AI processing through three key features: model compression, hardware-aware quantization, and optimized inference engines. Its cross-platform support and efficient resource management make it a good fit for edge AI applications in smart devices, robotics, and industrial automation.

Deployment and Management Tools

The right deployment and management frameworks automate the distribution of machine learning models, optimize resource use, and keep performance consistent across many edge devices. With over-the-air (OTA) updates, devices can automatically receive software updates, security fixes, and maintenance, which large-scale edge AI deployments depend on.

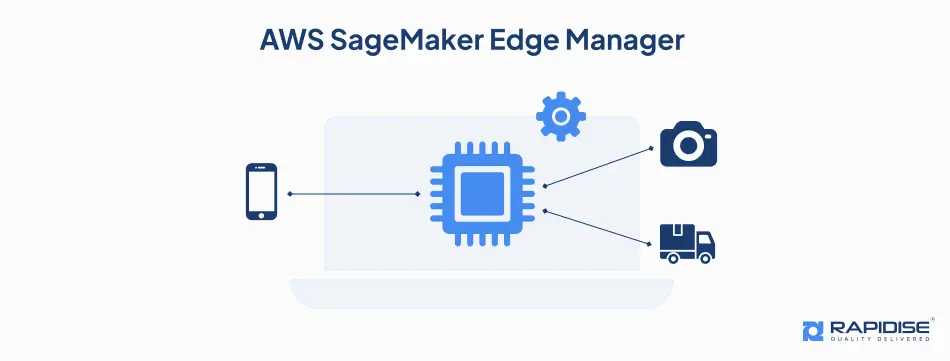

AWS SageMaker Edge Manager

AWS SageMaker Edge Manager combines model compression techniques with hardware acceleration to improve performance on resource-constrained devices. It also suits enterprise use because it includes built-in security features for model encryption and integrity verification.

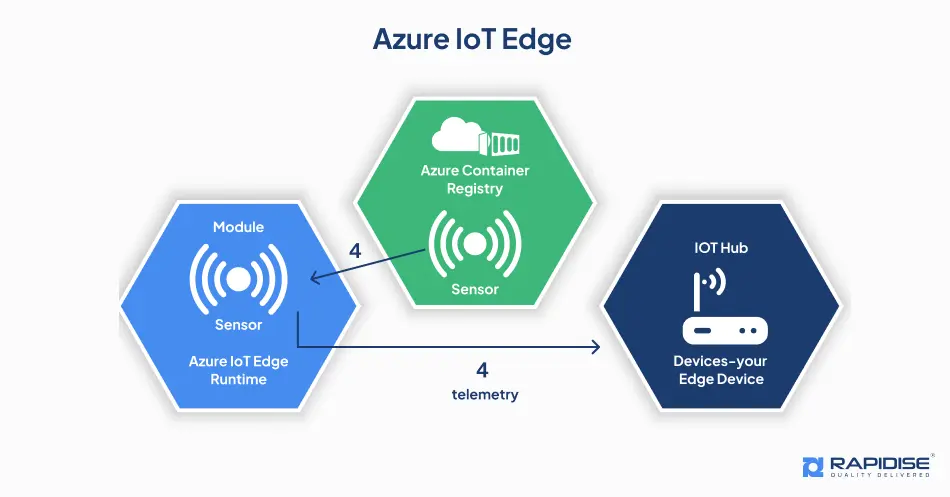

Azure IoT Edge

Azure IoT Edge integrates with Microsoft's AI and analytics services, so cloud and edge environments work together smoothly. Its modular design provides scalability, letting companies manage and maintain AI workloads that run across different platforms and devices.

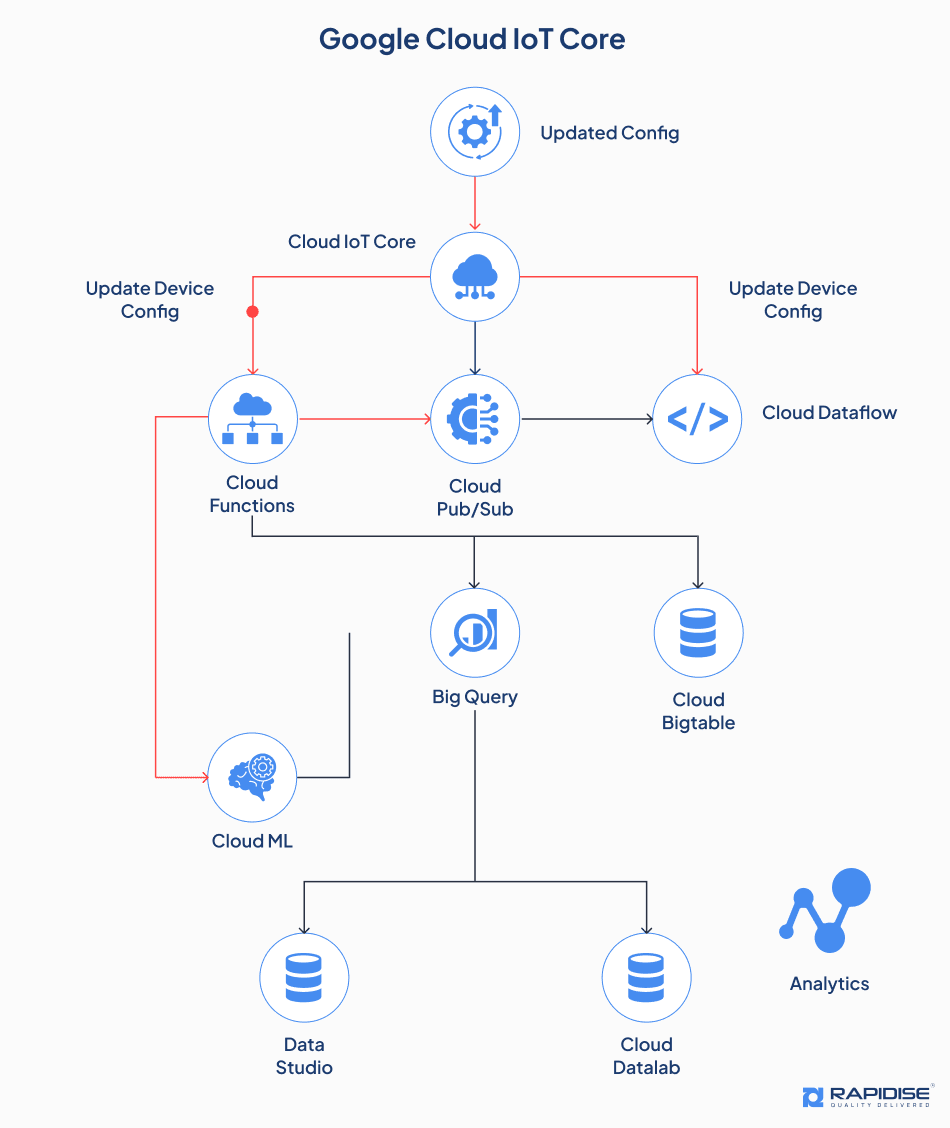

Google Cloud IoT Core

Google Cloud IoT Core integrates with TensorFlow and Edge TPU to optimize inference. Its remote updates, built-in authentication, and device telemetry simplify AI model management across a fleet of IoT devices.

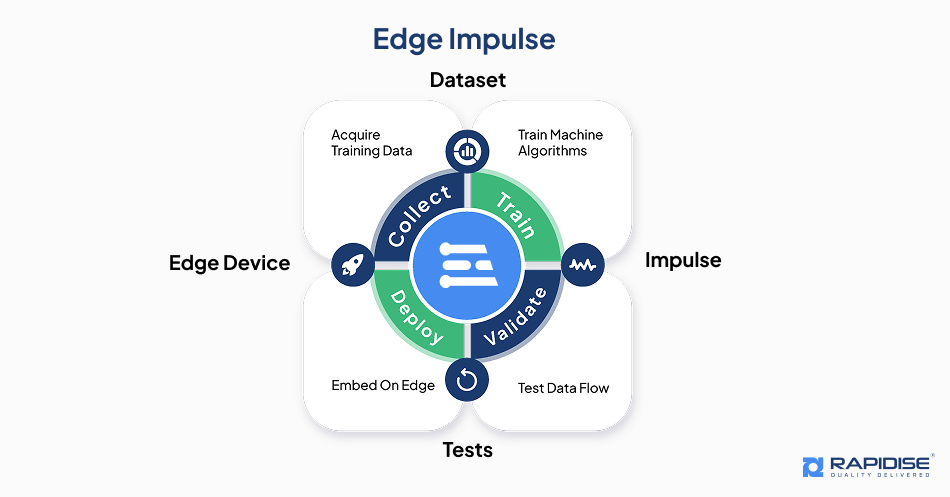

Edge Impulse

Edge Impulse provides data collection and training optimization, so users can build efficient deployments for both microcontrollers and NPUs. Through its cloud interface, users can also manage deployed models remotely and improve their AI applications continuously.

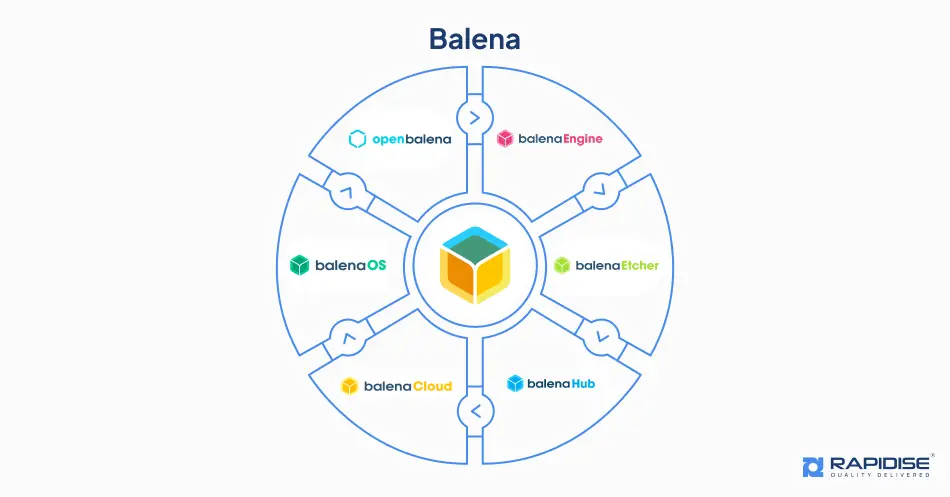

Balena

Balena supports a wide range of hardware architectures and offers remote device inspection, device health monitoring, and fleet management. Its deployment system is container-based, which makes AI workloads more flexible and scalable across different hardware.

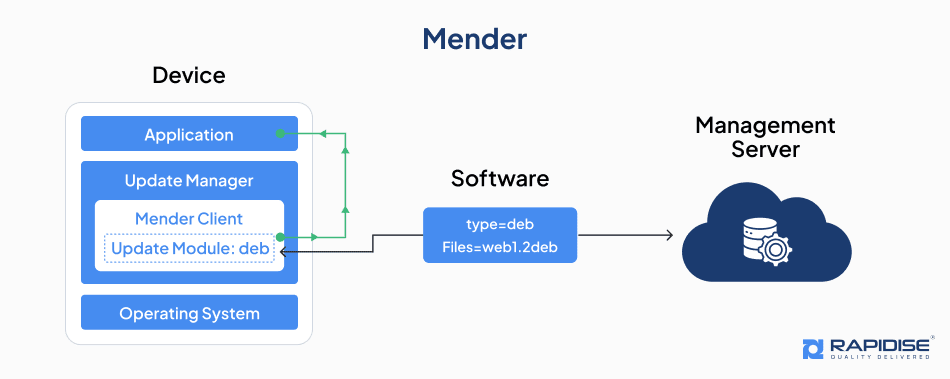

Mender

Mender protects edge AI applications against unauthorized access and tampering with strong security features, including cryptographic signature verification. It supports IoT deployments at scale and works in both connected and intermittently connected network environments.

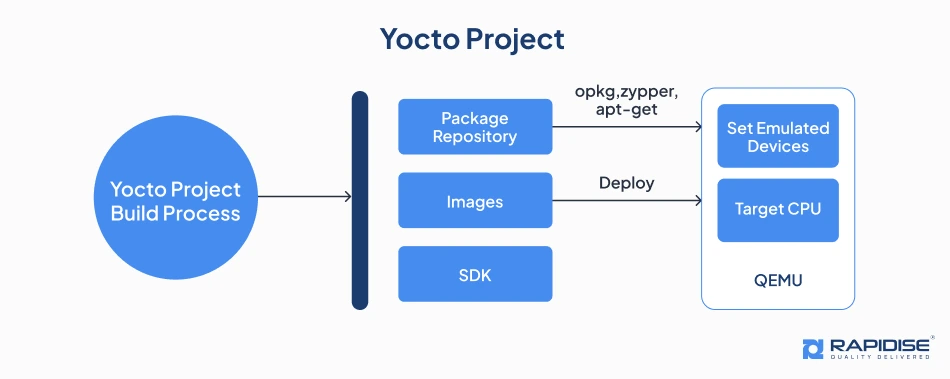

Yocto Project

The Yocto Project lets developers build software stacks tailored to their hardware, which makes model execution more efficient and removes unnecessary system overhead. This adaptability makes it a preferred tool for edge AI applications in industrial automation, robotics, and automotive systems.

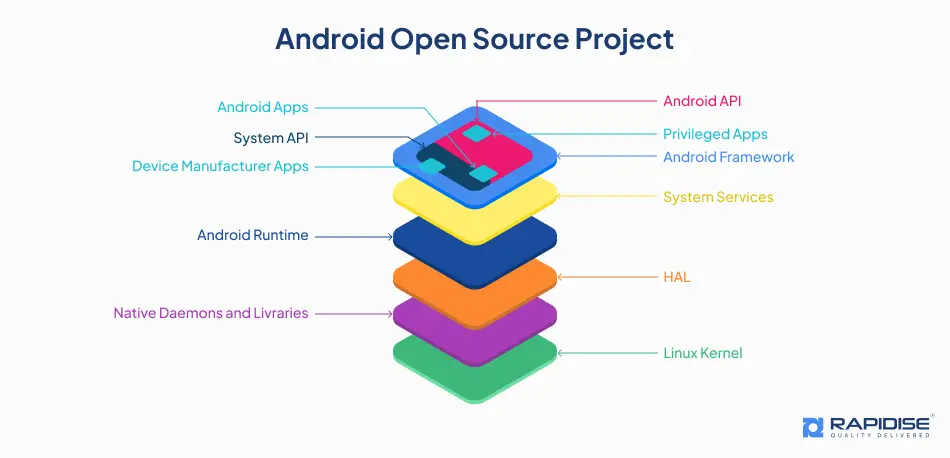

Android Open Source Project (AOSP)

AOSP gives developers access to hardware acceleration libraries such as NNAPI, which speeds up AI model execution on AI chips. With broad developer support, the Android Open Source Project remains a solid base for AI applications on smartphones, smart appliances, and automotive infotainment systems.

Hardware Acceleration Libraries and Frameworks

Hardware acceleration frameworks use specialized processors such as GPUs, TPUs, and FPGAs to run models faster while drawing less power. By shifting the workload to optimized hardware, they enable real-time operation in systems such as autonomous driving platforms, industrial control systems, and smart surveillance.

CUDA

Many AI applications that need high-performance inference, including computer vision and robotics workloads, use CUDA as their programming framework. Because it runs complex computations efficiently, it is the preferred option for edge AI deployments on NVIDIA-powered devices.

OpenCL

OpenCL runs on heterogeneous platforms, which makes it a widely used option for edge AI applications across computing environments. Its efficient workload distribution and memory optimization keep AI algorithms running consistently on low-power edge hardware.

Xilinx Vitis AI

Vitis AI delivers fast, power-efficient inference, which suits applications in autonomous driving, medical imaging, and industrial automation. The framework also includes model pruning and compression to improve performance on edge platforms with limited computing resources.

Development Platforms and Kits

Development platforms and kits provide hardware and software environments optimized for deploying AI models at the edge. They include specialized processing units such as GPUs, NPUs, and FPGAs that speed up inference and reduce power consumption.

NVIDIA Jetson

The NVIDIA Jetson family includes modules such as the Jetson Nano, Jetson Xavier NX, and Jetson AGX Orin, which serve different AI processing requirements. Jetson devices deploy AI models efficiently through their integration of CUDA, TensorRT, and the DeepStream SDK.

Qualcomm Chipsets (QCS6490, QCS610, QCS8550, QCS8125, QCS410, QCS625, QCS6125, QCS6225)

These chipsets run AI processing on the device, so decisions can be made quickly without depending on cloud pipelines. The Qualcomm Neural Processing SDK helps developers deploy AI models smoothly on these chipsets.

NXP Processors (i.MX 8M Plus Range of Processors)

These processors handle voice recognition, image processing, and anomaly detection, which makes them well suited to industrial automation, smart city, and automotive applications. NXP also provides its eIQ machine learning software and development tools, which simplify deploying AI models on embedded systems.

TI High-End Processors (TDA4VM/TDA4VH)

Texas Instruments' TDA4VM and TDA4VH SoCs are optimized for high-performance computing in automotive and industrial edge AI applications. They combine deep learning accelerators, vision processors, and DSPs to handle advanced AI tasks such as object recognition, sensor fusion, and real-time data analysis.

Raspberry Pi with AI Accelerators

Pairing a Raspberry Pi with an accelerator such as the Google Coral TPU or Intel Neural Compute Stick has made AI development platforms more affordable. Developers can use this combination to deploy low-power machine learning prototypes on embedded devices. The Raspberry Pi also supports frameworks that let AI applications perform well in IoT solutions, smart home devices, and educational tools.

Intel Movidius

Movidius is widely used for computer vision tasks such as facial recognition, object tracking, and augmented reality. Developers can deploy models with the Intel OpenVINO toolkit, which makes it easy to optimize them for embedded systems such as drones, surveillance cameras, and industrial robots.

Security Considerations for Edge AI

Security weaknesses expose edge AI applications to attacks such as data manipulation, unauthorized access, and model theft, which compromise both system security and user privacy. Strong security measures are needed to protect AI models and data and to keep AI decision-making reliable.

Threats

Data Poisoning

An autonomous vehicle that runs on corrupted sensor data can make recognition errors that lead to unsafe driving. Preventing data poisoning requires strong data validation and anomaly detection to filter out unreliable input data.
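As a rough illustration of anomaly detection on incoming data, the sketch below drops readings that sit far from the median, using the modified z-score based on the median absolute deviation (MAD). The function name `filter_outliers`, the threshold of 3.5, and the sample readings are assumptions chosen for this example, not part of any specific product.

```python
import statistics


def filter_outliers(readings, threshold=3.5):
    """Keep readings whose modified z-score is within the threshold."""
    median = statistics.median(readings)
    mad = statistics.median(abs(x - median) for x in readings)
    if mad == 0:
        # No spread to judge against, so keep everything.
        return list(readings)
    return [x for x in readings if 0.6745 * abs(x - median) / mad <= threshold]


# Hypothetical temperature readings with one implausible spike.
sensor = [20.1, 19.8, 20.3, 20.0, 95.0, 19.9]
clean = filter_outliers(sensor)
print(clean)
```

The median and MAD are used instead of the mean and standard deviation because a single extreme value shifts them far less.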

Model Theft

Theft of intellectual property is a severe risk for companies that have invested in proprietary AI models, because attackers can both misuse and resell stolen models. Model encryption, obfuscation, and secure enclave execution make it harder to steal a model.

Physical Attacks

Critical sectors such as healthcare, defense, and finance must take physical attacks on their AI applications seriously because the risks are substantial. Hardware protections, secure boot, and access restrictions help organizations reduce these risks.

Side-channel Attacks

Because side-channel attacks do not require the attacker to interact with the system's software, they are hard to detect. Defenses include power-analysis-resistant hardware, noise injection, and constant-time execution.
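Constant-time execution can be illustrated with secret comparison. A minimal sketch, assuming a device checks a shared token: Python's standard `hmac.compare_digest` is designed so that its running time does not reveal where two values differ. The `token_matches` wrapper and the token values are hypothetical.

```python
import hmac


def token_matches(expected: bytes, provided: bytes) -> bool:
    # An ordinary == comparison may return at the first mismatching byte,
    # which can leak how much of a guess was correct through timing.
    return hmac.compare_digest(expected, provided)


expected = b"device-secret-token"
ok = token_matches(expected, b"device-secret-token")
bad = token_matches(expected, b"device-secret-tokem")
print(ok, bad)
```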

Mitigation Strategies

Secure Boot

Secure boot uses cryptographic signatures to verify the integrity of the firmware and operating system, which prevents unauthorized changes. It is essential for keeping edge AI systems reliable, especially in IoT and industrial applications.

Data Encryption & Decryption

Data should be protected with a mix of encryption standards, such as AES-256 for data at rest and TLS for data in transit. Homomorphic encryption goes further by letting AI models operate on encrypted data without exposing the original contents.

Model Signing

Model signing authenticates AI models so that attackers cannot substitute malicious versions. For stronger protection, it should be combined with secure distribution channels and version control.
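The idea can be sketched with an HMAC-SHA256 tag from the Python standard library. This is a simplified stand-in: production model signing typically uses asymmetric signatures so that devices hold only a public key, whereas this example assumes a shared secret. The helper names, the key, and the model bytes are all hypothetical.

```python
import hashlib
import hmac


def sign_model(model_bytes: bytes, key: bytes) -> str:
    """Return a hex tag that binds the model contents to the key."""
    return hmac.new(key, model_bytes, hashlib.sha256).hexdigest()


def verify_model(model_bytes: bytes, key: bytes, signature: str) -> bool:
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(sign_model(model_bytes, key), signature)


key = b"demo-key-not-for-production"
model = b"\x00fake-model-weights"
signature = sign_model(model, key)

tampered = model + b"\x01"
print(verify_model(model, key, signature), verify_model(tampered, key, signature))
```

A device would refuse to load any model file whose tag fails verification.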

Key Assignment

Hardware security modules (HSMs) and trusted platform modules (TPMs) secure the distribution of cryptographic keys across edge networks. Regular key rotation and revocation policies limit the damage from compromised credentials.
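A rotation and revocation policy can be reduced to a small decision rule. The sketch below is an assumption-laden illustration: `key_status`, the 90-day maximum age, and the dates are invented for the example, and a real deployment would keep this state in an HSM, TPM, or key management service.

```python
from datetime import datetime, timedelta, timezone


def key_status(created_at, now, max_age, revoked=False):
    """Classify a key as 'revoked', due to 'rotate', or still 'valid'."""
    if revoked:
        return "revoked"
    if now - created_at >= max_age:
        return "rotate"
    return "valid"


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
max_age = timedelta(days=90)

fresh = key_status(datetime(2024, 5, 1, tzinfo=timezone.utc), now, max_age)
stale = key_status(datetime(2024, 1, 1, tzinfo=timezone.utc), now, max_age)
pulled = key_status(datetime(2024, 5, 1, tzinfo=timezone.utc), now, max_age, revoked=True)
print(fresh, stale, pulled)
```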

Regular Updates

Over-the-air (OTA) updates let organizations deploy security patches and model improvements promptly. The update transfer and verification steps must themselves be secured so that attackers cannot inject malicious changes in transit.
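A device-side check before installing an update might look like the following sketch. It assumes a hypothetical manifest format with `version` and `sha256` fields, and it assumes the manifest itself has already been authenticated (for example, by a signature check), which is not shown here.

```python
import hashlib


def should_install(payload: bytes, manifest: dict, installed_version: int) -> bool:
    """Accept an update only if it is intact and newer than what is installed."""
    digest = hashlib.sha256(payload).hexdigest()
    if digest != manifest["sha256"]:
        return False  # payload was corrupted or modified in transit
    return manifest["version"] > installed_version  # refuse rollbacks


payload = b"model-v7"
manifest = {"version": 7, "sha256": hashlib.sha256(payload).hexdigest()}

print(should_install(payload, manifest, installed_version=6))
print(should_install(payload + b"x", manifest, installed_version=6))
print(should_install(payload, manifest, installed_version=7))
```

Rejecting versions that are not newer guards against an attacker replaying an older, vulnerable release.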

Best Practices for Edge AI Development

Data Management

Data can be stored efficiently using three optimization techniques: compression, deduplication, and noise reduction, which also supports model accuracy. Protecting sensitive user information requires encryption and anonymization to preserve data privacy, particularly in the financial and healthcare industries.
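Deduplication and compression can be combined in a few lines with the standard library. In this sketch, `dedupe_and_compress` and the sample records are hypothetical, and records are assumed not to contain newline bytes because a newline is used as the separator.

```python
import hashlib
import zlib


def dedupe_and_compress(records):
    """Drop exact duplicate records, then compress what remains."""
    seen = set()
    unique = []
    for record in records:
        digest = hashlib.sha256(record).digest()
        if digest not in seen:
            seen.add(digest)
            unique.append(record)
    # Assumes no record contains b"\n", which is used as the separator.
    blob = zlib.compress(b"\n".join(unique))
    return unique, blob


records = [b"temp=20.1", b"temp=20.1", b"temp=20.3", b"temp=20.1"]
unique, blob = dedupe_and_compress(records)
restored = zlib.decompress(blob).split(b"\n")
print(unique, restored)
```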

Model Selection

To minimize model size, developers should apply quantization and pruning techniques that preserve model performance. Choosing models designed for low-power hardware promotes smooth execution and longer battery life in mobile and IoT applications.
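Pruning can be sketched as zeroing the weights with the smallest magnitudes. The example below is a minimal, framework-free illustration; `prune_by_magnitude`, the 50% sparsity target, and the weights are assumptions for demonstration, and real toolchains usually prune gradually and fine-tune afterward.

```python
def prune_by_magnitude(weights, sparsity):
    """Zero out the given fraction of weights, smallest magnitudes first."""
    n_prune = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:n_prune])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]


weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
pruned = prune_by_magnitude(weights, sparsity=0.5)
print(pruned)
```

The zeroed entries can then be stored in a sparse format or skipped at inference time, which is where the size and speed savings come from.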

Testing and Validation

Testing through simulations, stress tests, and field trials lets developers find potential failures before deployment. A/B testing and performance benchmarking then continuously monitor model predictions to keep AI applications accurate.
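For performance benchmarking, latency percentiles are usually more informative than an average. The following sketch times a placeholder workload; `percentile`, `benchmark`, and the lambda standing in for a model call are hypothetical, and measured values will vary by device.

```python
import math
import time


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def benchmark(fn, runs=50):
    """Call fn() repeatedly and return per-call latencies in milliseconds."""
    latencies = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        latencies.append((time.perf_counter() - start) * 1000)
    return latencies


# Placeholder workload standing in for a model inference call.
latencies = benchmark(lambda: sum(i * i for i in range(1000)))
p50, p95 = percentile(latencies, 50), percentile(latencies, 95)
print(f"p50={p50:.3f} ms, p95={p95:.3f} ms")
```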

Monitoring and Maintenance

Alerting systems with automatic logging track anomalies and trigger corrective action. An over-the-air (OTA) update system makes it possible to update both software and models remotely on edge AI devices, which supports continuous optimization, security, and remote administration.
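One simple form of alerting is a rolling check on prediction confidence. This sketch assumes the model reports a confidence score per prediction; the `ConfidenceMonitor` class, the window size, and the 0.6 threshold are illustrative choices rather than recommended values.

```python
from collections import deque


class ConfidenceMonitor:
    """Raise an alert when mean confidence over a full window drops too low."""

    def __init__(self, window=5, threshold=0.6):
        self.scores = deque(maxlen=window)
        self.threshold = threshold

    def record(self, confidence):
        """Store a score and return True if an alert should fire."""
        self.scores.append(confidence)
        window_full = len(self.scores) == self.scores.maxlen
        mean = sum(self.scores) / len(self.scores)
        return window_full and mean < self.threshold


monitor = ConfidenceMonitor(window=3, threshold=0.6)
alerts = [monitor.record(c) for c in [0.9, 0.8, 0.85, 0.4, 0.3, 0.2]]
print(alerts)
```

Waiting for a full window avoids firing on a single low-confidence prediction.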

Lifecycle Management

A lifecycle management system should cover periodic retraining on fresh data, secure update procedures, and proper retirement of legacy models. This keeps edge AI applications accurate and reliable at every stage, from deployment through updates to decommissioning.

How Does Rapidise Help You in Edge AI Development?

Our team uses TensorFlow Lite, ONNX Runtime, and CUDA to improve model performance without increasing computational cost. We also provide lifecycle management and remote monitoring services for long-term reliability. Rapidise delivers state-of-the-art solutions that let you run AI models, strengthen security, and receive continuous updates to drive innovation at the edge.